Once data has been ingested, transformed, and aggregated, the next step is to analyze and explore it. Many tools on the market can do this, and one of the most popular is Databricks.

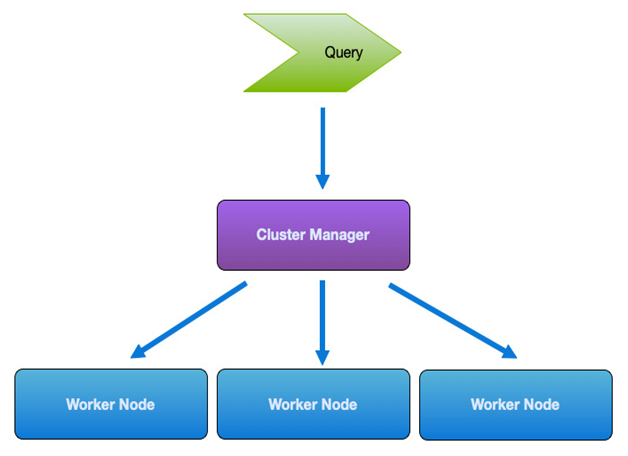

Databricks uses the Apache Spark engine that is well suited to dealing with massive amounts of data due to its internal architecture. Whereas a traditional database server would typically run workloads, Databricks uses Spark clusters built from multiple nodes. Data analytics processes are then distributed between those nodes to process them in parallel, as shown in the following diagram:

Figure 13.6 – Example Spark cluster architecture
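The idea of splitting work across nodes and combining the partial results can be sketched in plain Python. This is only an analogy, not Spark code: threads in a single process stand in for worker nodes, and the function names (`split_into_partitions`, `partial_sum`, `distributed_sum`) and the sample data are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(rows, num_partitions):
    """Split rows into roughly equal partitions, one per simulated worker node."""
    return [rows[i::num_partitions] for i in range(num_partitions)]


def partial_sum(partition):
    """Work done independently on one node: aggregate only its own partition."""
    return sum(partition)


def distributed_sum(rows, num_nodes=4):
    """Fan the partitions out to the workers, then combine the partial results."""
    partitions = split_into_partitions(rows, num_nodes)
    with ThreadPoolExecutor(max_workers=num_nodes) as pool:
        partials = list(pool.map(partial_sum, partitions))
    return sum(partials)


sales = list(range(1, 1001))  # stand-in data: the integers 1..1000
print(distributed_sum(sales, num_nodes=4))  # prints 500500
```

A real Spark cluster applies the same pattern across separate machines and also handles scheduling, data movement, and node failures, none of which this sketch attempts.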

Azure Databricks is a managed Databricks service that provides excellent flexibility for creating and using Spark clusters as and when needed.

Azure Databricks

Azure Databricks provides workspaces that multiple users can use to build and run analytics jobs collaboratively. A Databricks workspace contains notebooks, which are web-based interfaces for running commands executed on a Spark cluster.

Notebooks are written in Python, SQL, R, or Scala. Depending on the type of analytical work being performed, you can choose between different Databricks runtimes. These are listed here:

- Databricks: A general-purpose runtime using Apache Spark, with additional components for performance, security, and usability on the Azure platform.

- Databricks Runtime for Machine Learning (Databricks Runtime ML): Uses the Databricks runtime at its core but is optimized for machine learning (ML) and data science. It includes popular ML libraries such as TensorFlow, Keras, PyTorch, and XGBoost.

- Databricks for Genomics: A highly specialized runtime built for working with biomedical data.

- Databricks Light: A cut-down version of the full Databricks runtime. It is particularly suited to smaller jobs that don’t require as much performance or reliability.

When you create a Databricks notebook, you can choose the type and size of the cluster that it will run on. Notebooks run as workloads on a cluster, and a workload can be one of two types, as outlined here:

- Data engineering: For scheduled workloads, such as jobs that perform specific tasks and are run repeatedly. Clusters are created and destroyed as required.

- Data analytics: For interactive workloads that run on a general-purpose cluster. These workloads run while you are working in a notebook to develop models and analytics.

Azure Databricks provides compute on demand as a serverless offering, and clusters are destroyed when they are no longer in use.
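To make the on-demand behavior concrete, here is a minimal sketch of a cluster specification built as a Python dictionary. The field names follow the Databricks Clusters API as best recalled and should be checked against the current API reference. The helper `build_cluster_spec` is hypothetical, and the runtime version and VM size are placeholder values.

```python
import json


def build_cluster_spec(name, runtime_version, node_type,
                       min_workers, max_workers, idle_minutes):
    """Build a cluster definition that scales with load and stops when idle."""
    if min_workers > max_workers:
        raise ValueError("min_workers must not exceed max_workers")
    return {
        "cluster_name": name,
        "spark_version": runtime_version,  # selects the Databricks runtime
        "node_type_id": node_type,         # VM size used for each node
        "autoscale": {
            "min_workers": min_workers,
            "max_workers": max_workers,
        },
        # Terminate the cluster after this many idle minutes.
        "autotermination_minutes": idle_minutes,
    }


# Placeholder values, assumed for illustration only.
spec = build_cluster_spec(
    name="interactive-analytics",
    runtime_version="13.3.x-scala2.12",
    node_type="Standard_DS3_v2",
    min_workers=2,
    max_workers=8,
    idle_minutes=30,
)
print(json.dumps(spec, indent=2))
```

The autoscale range lets the cluster grow and shrink with the workload, while the idle timeout means you stop paying for compute once nobody is using it.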

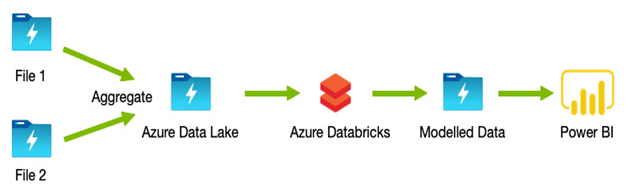

Notebooks can be executed from Azure Data Factory as part of a pipeline. In this way, you can perform any ingestion, transformation, and aggregation using Data copy or Data workflow activities, which will then output the result to storage or a database. The Azure Databricks notebook job can then be called, and this will read the processed data to perform other analytics or calculations, as in the following example:

Figure 13.7 – Using Databricks as part of an Azure Data Factory pipeline
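A pipeline of this shape can be sketched as a Python dictionary that mirrors the JSON Azure Data Factory uses for pipeline definitions. This is an illustrative outline, not a deployable pipeline: the activity names, the linked service name, the notebook path, the storage path, and the helper `build_pipeline` are all hypothetical, and the copy step's source and sink settings are deliberately left empty.

```python
import json


def build_pipeline(notebook_path, input_path):
    """Return a pipeline definition: a copy step followed by a notebook step."""
    copy_step = {
        "name": "CopyRawData",
        "type": "Copy",
        # Source and sink details are omitted; they depend on your datasets.
        "typeProperties": {},
    }
    notebook_step = {
        "name": "RunAnalyticsNotebook",
        "type": "DatabricksNotebook",
        # Run the notebook only after the copy step has succeeded.
        "dependsOn": [
            {"activity": copy_step["name"], "dependencyConditions": ["Succeeded"]}
        ],
        "linkedServiceName": {
            "referenceName": "AzureDatabricksLinkedService",  # hypothetical name
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "notebookPath": notebook_path,
            # Parameters passed to the notebook when it runs.
            "baseParameters": {"input_path": input_path},
        },
    }
    return {
        "name": "IngestThenAnalyze",
        "properties": {"activities": [copy_step, notebook_step]},
    }


pipeline = build_pipeline("/Shared/analytics", "/mnt/processed/sales")
print(json.dumps(pipeline, indent=2))
```

The `dependsOn` entry is what orders the two steps, so the notebook reads the data only after the copy activity has finished writing it.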

Azure Databricks is not the only choice for analytics; Microsoft also offers Azure Synapse Analytics, which can be used independently or as part of a more extensive toolset.
