Azure Data Factory is a serverless service that lets you automate and simplify many steps in a data flow. Because it is serverless, you pay only for what you use.

Much of Azure Data Factory’s power and flexibility is due to the many connectors that allow you to integrate with a wide range of linked services and tools.

Linked services define connections to data stores such as storage accounts and databases, and datasets are built on top of them. A dataset describes source data, for example, comma-separated values (CSV) files or a database table. Datasets also describe output data, which can likewise be CSV files or database tables.
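To make the relationship between a linked service and a dataset concrete, here is a minimal sketch written as Python dictionaries that mirror the general JSON shape Azure Data Factory uses for these resources. The names `RawDataLake` and `CustomersCsv`, the `raw` file system, and the file name are hypothetical, and the property names should be checked against the connector documentation before use.

```python
import json

# Hypothetical linked service: a connection to an ADLS Gen2 storage account.
# The URL is a placeholder, not a real account.
linked_service = {
    "name": "RawDataLake",
    "properties": {
        "type": "AzureBlobFS",
        "typeProperties": {"url": "https://<account>.dfs.core.windows.net"},
    },
}

# Hypothetical dataset: a CSV file reachable through that linked service.
dataset = {
    "name": "CustomersCsv",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": linked_service["name"],
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobFSLocation",
                "fileSystem": "raw",
                "fileName": "customers.csv",
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
    },
}

# Serialize to JSON to inspect the definition.
print(json.dumps(dataset, indent=2))
```

The point of the sketch is the reference: the dataset does not hold connection details itself, it only names the linked service that does.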

Azure Data Factory supports a wide range of services to create datasets from, including—but not limited to—the following:

- Amazon Redshift

- Amazon Simple Storage Service (S3)

- Azure Blob Storage

- Azure Cosmos DB

- Azure Data Lake Storage (ADLS) Gen1 and Gen2

- Azure Database for MariaDB

- Azure SQL Database

- Microsoft Dynamics 365

- File Transfer Protocol (FTP)

- Google BigQuery

- Google Cloud Storage (GCS)

- Systems Applications and Products in Data Processing (SAP)

- Salesforce

- ServiceNow

Azure Data Factory allows you to use those datasets in pipelines, which define activities that perform a task, such as a simple copy or a transformation. Other services can be linked to achieve end-to-end data ingestion and transformation, such as the ones listed here:

- Azure Functions

- Azure Databricks

- Azure Batch

- Azure HDInsight

- Azure Machine Learning

- Power Query

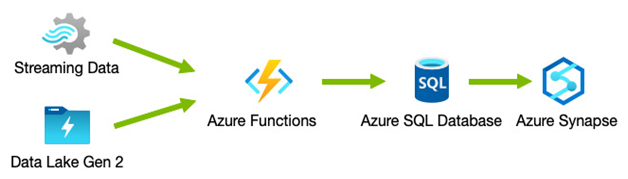

The following diagram shows a high-level example of this concept:

Figure 13.3 – Azure Data Factory pipelines

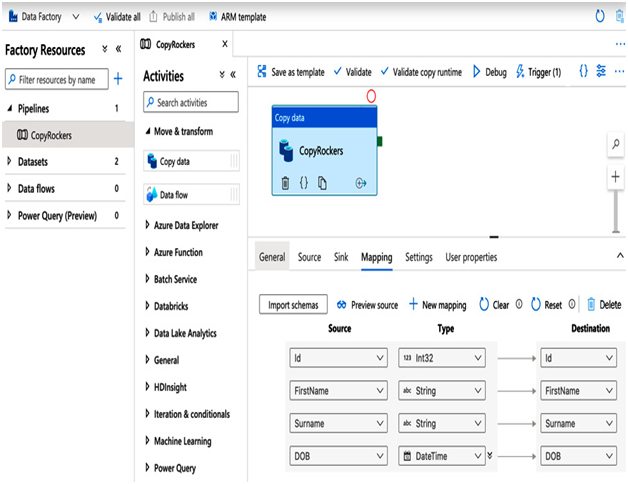

The simplest example is a data copy from a raw CSV file to an Azure SQL database. Using the Copy data activity, you can set the source as a CSV file in a linked ADLS-enabled storage account, set the destination (or sink) as an Azure SQL database, and then define specific mappings between the source and sink columns and their data types, as depicted in the following screenshot:

Figure 13.4 – An example Copy data pipeline
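The Copy data pipeline described above can be sketched as a Python dictionary that follows the general JSON shape of a pipeline definition. This is an illustration under assumptions, not the pipeline from the screenshot: the dataset names `CustomersCsv` and `CustomersTable`, the column names, and the helper `column_mappings` are all hypothetical.

```python
def column_mappings(pairs):
    """Build source-to-sink column mappings from (source, sink) name pairs."""
    return [{"source": {"name": src}, "sink": {"name": dst}} for src, dst in pairs]


# Hypothetical pipeline with a single Copy activity: CSV source, Azure SQL sink.
copy_pipeline = {
    "name": "CopyCustomersToSql",
    "properties": {
        "activities": [
            {
                "name": "CopyCsvToSql",
                "type": "Copy",
                "inputs": [
                    {"referenceName": "CustomersCsv", "type": "DatasetReference"}
                ],
                "outputs": [
                    {"referenceName": "CustomersTable", "type": "DatasetReference"}
                ],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "AzureSqlSink"},
                    # Explicit mappings between source and sink columns.
                    "translator": {
                        "type": "TabularTranslator",
                        "mappings": column_mappings(
                            [("id", "CustomerId"), ("name", "FullName")]
                        ),
                    },
                },
            }
        ]
    },
}
```

Keeping the mappings in a small helper makes it easy to see, and to review, exactly which source column lands in which sink column.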

A pipeline can then be run manually, or you can create triggers that define when it runs. A trigger can follow a set Schedule, use a Tumbling window whereby the pipeline runs every x minutes, hours, or days, or fire in response to an event. A typical event is a file being uploaded to a storage account. Such a trigger can be set to watch for a file pattern or to monitor a designated container in the storage account.
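The sketch below illustrates two of these trigger ideas in plain Python; it does not call Azure Data Factory. It assumes that a tumbling window means fixed-size, contiguous, non-overlapping intervals counted from a start time, and it models an event filter as a simple prefix and suffix match on the file path. The function names and sample paths are hypothetical.

```python
from datetime import datetime, timedelta


def tumbling_windows(start, interval, until):
    """Yield (window_start, window_end) pairs that have fully elapsed by `until`.

    Windows are contiguous and never overlap: each one starts where the
    previous one ended.
    """
    window_start = start
    while window_start + interval <= until:
        yield window_start, window_start + interval
        window_start += interval


def matches_event(blob_path, begins_with="", ends_with=""):
    """Return True if an uploaded file's path matches the watched pattern."""
    return blob_path.startswith(begins_with) and blob_path.endswith(ends_with)


# Hourly windows from midnight; at 03:30 only three windows are complete.
windows = list(
    tumbling_windows(
        start=datetime(2024, 1, 1, 0, 0),
        interval=timedelta(hours=1),
        until=datetime(2024, 1, 1, 3, 30),
    )
)
for window_start, window_end in windows:
    print(window_start.isoformat(), "->", window_end.isoformat())

# An upload under the watched container with a .csv suffix would fire the trigger.
print(matches_event("raw/sales/2024-01.csv", begins_with="raw/sales/", ends_with=".csv"))
```

Each window would correspond to one pipeline run covering exactly that slice of time, which is what makes tumbling windows suitable for processing data in regular, non-overlapping batches.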

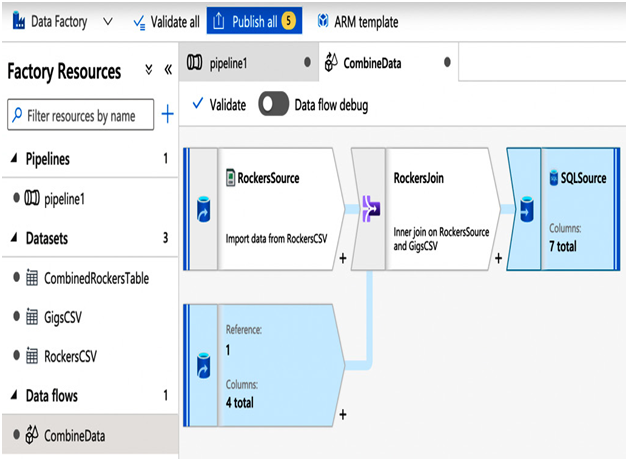

More advanced pipelines are also possible; for example, you can create a Data flow operation rather than a simple Copy data operation. Data flow activities allow more complex transformations to be performed. For instance, in the following screenshot, we read from two different CSV data files and combine their data using a related key. The output is then sent to an Azure SQL database:

Figure 13.5 – Dataflow pipeline combining multiple sources into a single output
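The logic of combining two CSV sources on a related key can be sketched in plain Python. This only illustrates the join that such a Data flow performs; it is not how Azure Data Factory executes it. The sample data, the column names, and the `inner_join` helper are hypothetical.

```python
import csv
import io

# Two hypothetical CSV sources that share the key column `customer_id`.
customers_csv = "customer_id,name\n1,Ada\n2,Grace\n"
orders_csv = "order_id,customer_id,total\n10,1,25.00\n11,2,40.50\n12,1,9.99\n"


def read_rows(text):
    """Parse CSV text into a list of dictionaries keyed by header name."""
    return list(csv.DictReader(io.StringIO(text)))


def inner_join(left, right, key):
    """Combine rows from `left` and `right` that share the same `key` value."""
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    return [{**l, **r} for l in left for r in index.get(l[key], [])]


# Each order row is enriched with the matching customer's columns.
joined = inner_join(read_rows(orders_csv), read_rows(customers_csv), "customer_id")
for row in joined:
    print(row)
```

In a real pipeline, the joined rows would then be written to the sink, here an Azure SQL database table, rather than printed.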

Activities need some form of compute to run on. Azure Data Factory provides this through integration runtimes (IRs), and there are three different runtimes you can use.
